Apollo 13 in software

What should we do when we have a critical bug in production?

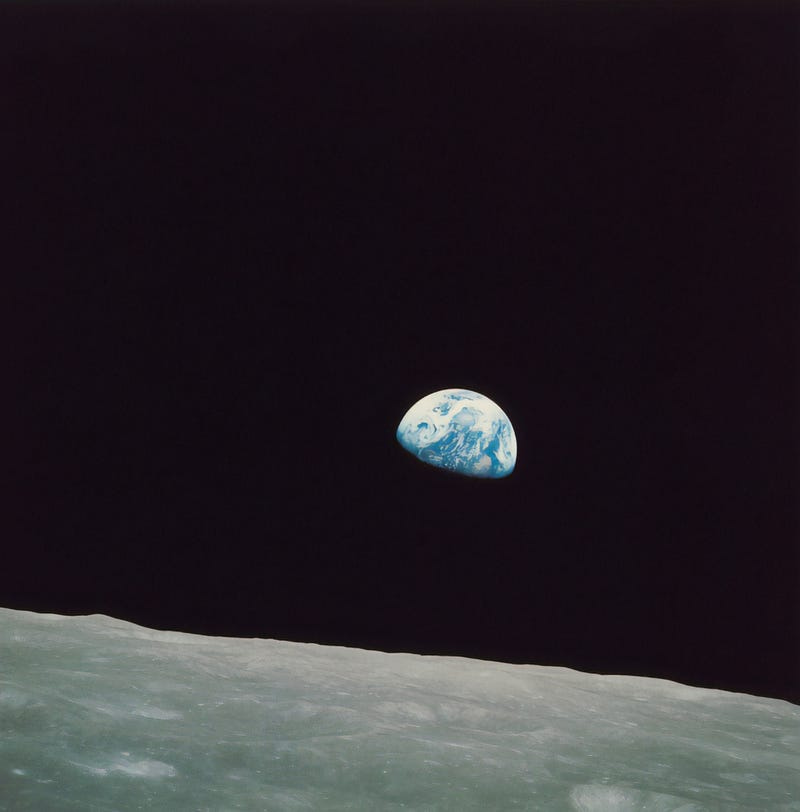

During the mission’s dramatic series of events, an oxygen tank explosion almost 56 hours into the flight forced the crew to abandon all thoughts of reaching the moon. The spacecraft was damaged, but the crew was able to seek cramped shelter in the lunar module for the trip back to Earth, before returning to the command module for an uncomfortable splashdown.

https://www.space.com/17250-apollo-13-facts.html

No one wants to find a critical bug in production that is costing us money, but when it happens, what works and what is usually a big mistake?

Calm down

First, calm down: panicking is synonymous with disaster. If you are not one of the people who can solve the issue, adding pressure is useless. Please don’t stand behind people, watching their screens. The only effect is pushing them into making mistakes or, even worse, into not making any decision at all.

Communicate with your stakeholders

This step greatly reduces stress for managers, stakeholders, and anyone else who is concerned about the bug but cannot do much to solve it. Telling them that we are working on it, and that we will share more when we have more information, is enough.

Regular communication is also critical, because transparency is very important in these scenarios. Spending a few minutes at regular intervals explaining the status of the bug is time well spent.

But we cannot be talking with everyone, so it’s good practice to have a spokesperson in charge of communicating with stakeholders.

Understand the problem

To solve anything, we first have to understand what is happening. So we need to talk with the people who found the bug and learn the steps to reproduce it and the desired behavior.

The best way to understand each other is a face-to-face conversation. If that is possible, go with other devs, so you can compare your understanding afterwards.

We need to be able to reproduce the problem in order to identify how to solve it.

The next thing is to stop the bleeding if the bug is both urgent and important. If it is not urgent or not important, it is business as usual.

Rollback if possible

If the problem is not easy to solve, we have identified the problematic version of our code, and we can accept losing the feature shipped in that version, then roll back to the previous version.

Can you easily answer these questions?

- Can you easily roll back to a previous version?

- How difficult is it to do?

This is one of those moments where you want to have:

- toggles in place, one click and the feature is off

- fast pipelines

- good knowledge of what has been shipped.
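As an illustration of the first item in the list above, here is a minimal sketch of a feature toggle. Everything in it is hypothetical: the `FeatureToggles` class, the `new_checkout` flag, and the discount logic are invented for the example, and the in-memory dictionary only stands in for whatever config service or database would back the toggles in a real system.

```python
class FeatureToggles:
    """In-memory toggle store (a stand-in for a real config service)."""

    def __init__(self, initial=None):
        self._flags = dict(initial or {})

    def is_enabled(self, name):
        # Unknown toggles default to off, so a missing entry never enables new code.
        return self._flags.get(name, False)

    def turn_off(self, name):
        # The "one click" that disables a feature.
        self._flags[name] = False


toggles = FeatureToggles({"new_checkout": True})


def checkout_total(amount):
    # The toggle is checked on every call, so switching it off
    # immediately routes traffic back to the old code path.
    if toggles.is_enabled("new_checkout"):
        return round(amount * 0.9, 2)  # newly shipped behavior (hypothetical)
    return round(amount, 2)  # previous, known-good behavior
```

If the new checkout turns out to be the source of the bug, calling `toggles.turn_off("new_checkout")` stops the bleeding while the old path keeps serving clients.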

A DevOps culture can help: you build it, you run it.

Create a test to validate that the bug is solved

Once we understand the problem and know how to reproduce it, we need to write the lowest-level automated test that can prove the bug exists.

This test needs to fail. Don’t write any production code until you have that test failing. The test should pass only when the desired behavior happens in the system.

The failing test proves that the bug is happening. Once the test fails, we can write the production code to make it pass.

Once the test passes, we have fixed the bug.
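The cycle described above can be sketched with an invented bug. The bill-splitting functions below are hypothetical and exist only for this example. The check encodes the desired behavior, so it fails against the buggy version and passes against the fixed one.

```python
def split_bill_buggy(total_cents, people):
    # Bug: integer division silently drops the remainder.
    return [total_cents // people] * people


def split_bill_fixed(total_cents, people):
    share, remainder = divmod(total_cents, people)
    # The first `remainder` people pay one extra cent, so nothing is lost.
    return [share + 1 if i < remainder else share for i in range(people)]


def shares_add_up(split_bill):
    """Regression check: the shares must add up to the original total."""
    return sum(split_bill(1000, 3)) == 1000
```

In a real code base there would be a single `split_bill` function. The check would be written first, seen to fail, and kept in the test suite after the fix so the bug cannot come back unnoticed.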

Deploy your fix to production

The best option is to use your normal process to deploy to production. If you are not able to do that, it is because your path to production is too complex. Think about how to solve that: your path to prod should be fast enough to use in an emergency.

Evaluate how to manage the effects caused by the bug

Think about the effects of the bug, how to calculate the damage, and what to do to minimize those effects.

Sometimes a bug in production means having to repair the consistency of the whole system’s state. For example, some events may not have been processed, leaving other services or systems out of sync with each other.
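One way to size this kind of damage is to compare what was emitted with what was actually processed. The sketch below assumes that events carry unique identifiers and that both lists can be obtained. The function name and the sample ids are hypothetical.

```python
def find_unprocessed(emitted_ids, processed_ids):
    """Return the emitted event ids that never reached the consumer."""
    processed = set(processed_ids)
    # Keep emission order so the missing events can be replayed in sequence.
    return [event_id for event_id in emitted_ids if event_id not in processed]


# Hypothetical sample data: four events emitted, two of them processed.
missing = find_unprocessed(["e1", "e2", "e3", "e4"], ["e1", "e3"])
```

The resulting list gives both a measure of the damage and a starting point for a replay.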

If the damage is significant, draw up a plan to fix it. This can mean communicating with clients and saying sorry.

Be prepared

To be good at solving problems in production, we need to be prepared. We need to understand our problems, and the four key metrics can help us with this.

Mean time to restore and deployment frequency are key to minimizing the cost of a bug in production.

Mean time to restore: the time from when someone is paged (either automatically or manually) about an outage until the outage is resolved.

Deployment frequency: a simple counter that is incremented whenever someone initiates a deploy, measured over a given time window.
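Both definitions translate almost directly into code. The sketch below assumes that paging, resolution, and deploy timestamps are already being recorded somewhere. The function names and the data shapes are assumptions made for the example.

```python
from datetime import datetime, timedelta


def mean_time_to_restore(incidents):
    """Average of (resolved_at - paged_at) over (paged_at, resolved_at) pairs."""
    if not incidents:
        return None  # no outages recorded, so there is nothing to average
    total = sum((resolved - paged for paged, resolved in incidents), timedelta())
    return total / len(incidents)


def deployment_frequency(deploy_times, window_start, window_end):
    """Count the deploys initiated in the window [window_start, window_end)."""
    return sum(1 for t in deploy_times if window_start <= t < window_end)
```

For example, two outages that lasted 30 and 90 minutes give a mean time to restore of one hour.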

Mean time to restore is closely related to having a good monitoring system that can warn us even before clients are affected. Investments in observability are worth it.

Deployment frequency is also a great measure of how familiar we are with our deployment process.

Practice makes perfect, so deploying regularly will help us understand what we have to do. It will also help us deploy small features, which gives us more hints about which deployment is the problematic one.

Learn

Learning from the incident is key. Blameless postmortems help a lot here, but they really need to be blameless. Please don’t blame: blaming only creates an environment where people are afraid of changing the system.

There is no such thing as zero risk of bugs. We can do things to reduce them to a ridiculously low level, but those practices require being fearless about:

- Refactoring mercilessly

- Deploying more frequently

- Writing automated tests