Fundamental Theorem of Software Engineering

This theorem marks the point where it's better to stop adding levels of indirection, and tells you when you can still add one. It's part of what I call Software Physics.

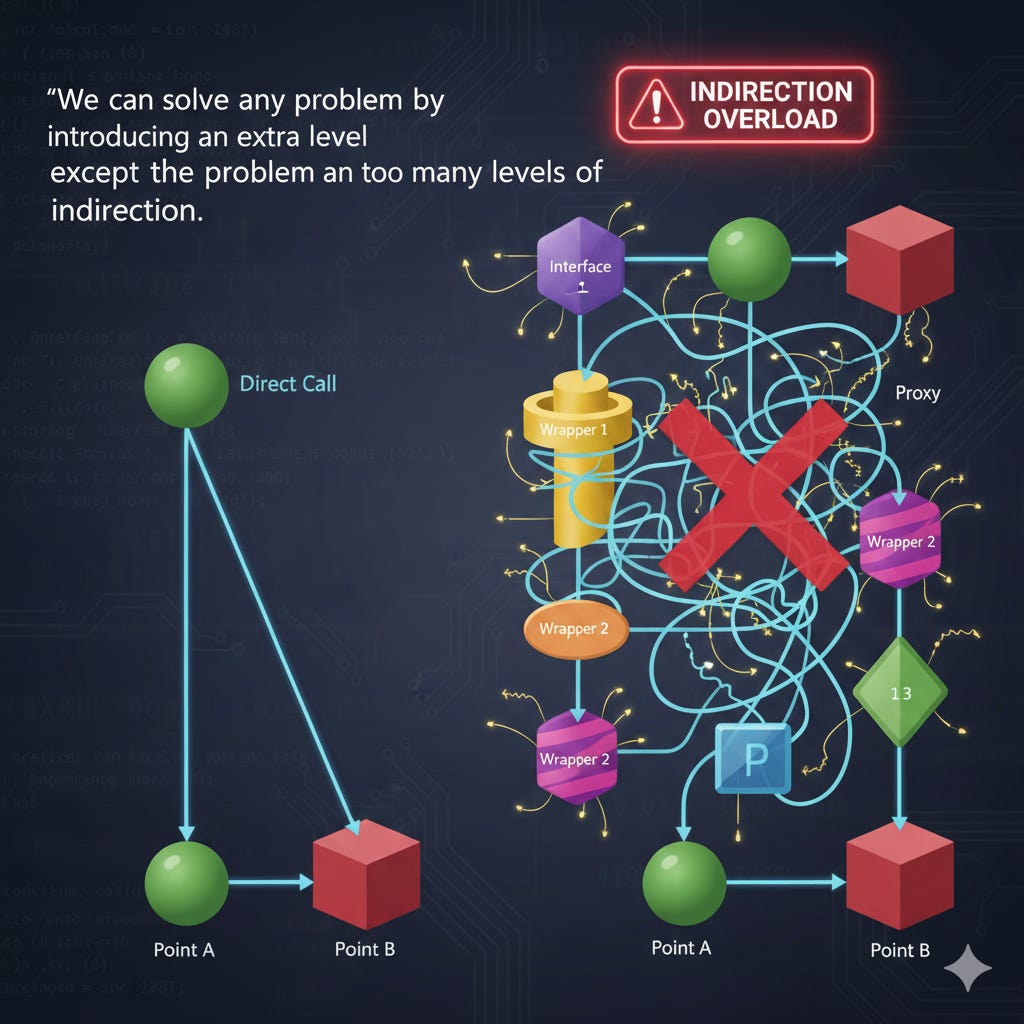

We can solve any problem by introducing an extra level of indirection, except for the problem of too many levels of indirection.

Wikipedia

This quote describes what happens in software nowadays. 90% of the problems have been solved by adding a new level of indirection. But as the quote says, there is a limit, and that limit tells us when we need to stop and refactor our code, taking all the parts into account together.

The question is: how do we know that we have too many levels of indirection?

Too many levels of indirection

Everyone knows the decorator pattern. It’s a great design pattern because it lets you add extra behaviors to an existing one.

Decorator is a structural design pattern that lets you attach new behaviors to objects by placing these objects inside special wrapper objects that contain the behaviors.
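As a minimal sketch of the pattern, and of how wrappers stack up, consider the following Python example. `Notifier` and its decorators are hypothetical names invented for illustration; they do not come from any library.

```python
class Notifier:
    """Base behavior: the object that gets wrapped (hypothetical example class)."""

    def send(self, message):
        return [f"email: {message}"]


class NotifierDecorator:
    """Wrapper with the same interface that forwards to the wrapped object."""

    def __init__(self, wrapped):
        self._wrapped = wrapped

    def send(self, message):
        return self._wrapped.send(message)


class SmsDecorator(NotifierDecorator):
    def send(self, message):
        # Extra behavior attached on top of whatever is wrapped.
        return super().send(message) + [f"sms: {message}"]


class SlackDecorator(NotifierDecorator):
    def send(self, message):
        return super().send(message) + [f"slack: {message}"]


# Each wrapper adds one more level of indirection around the base object.
notifier = SlackDecorator(SmsDecorator(Notifier()))
deliveries = notifier.send("deploy finished")
```

To know what `notifier.send` really does, a reader has to walk through every wrapper in the chain. That is cheap with two decorators and much less so with ten.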

But everyone also knows the “Law of the instrument”:

If the only tool you have is a hammer, it is tempting to treat everything as if it were a nail.

So the question is when to stop, because by default adding a new level of indirection is the right move.

My suggestion is to stop when the indirection no longer pays for itself:

When you have a bug and need hours to fix it, even though it turns out to be a really simple thing.

When you frequently cannot tell which line of code is going to be executed next.

When you see that your abstractions are too leaky.

When you have visible performance issues.

For each of these you can create fitness functions that alert you when a threshold is crossed.
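One of these signals can be approximated by how deep a use case travels through the call stack. The sketch below shows one possible fitness function for that, assuming Python and the standard `sys.setprofile` hook. The names `max_call_depth`, `handler`, `service`, `repository` and the `MAX_DEPTH` threshold are hypothetical and chosen only for this example; call depth is a rough proxy, not a complete measure of indirection.

```python
import sys


def max_call_depth(func, *args, **kwargs):
    """Run func and return (result, deepest nesting of Python calls it triggered)."""
    depth = 0
    deepest = 0

    def profiler(frame, event, arg):
        nonlocal depth, deepest
        if event == "call":        # a Python function was entered
            depth += 1
            deepest = max(deepest, depth)
        elif event == "return":    # a Python function was left
            depth -= 1
        # c_call / c_return events (built-ins) are deliberately ignored.

    previous = sys.getprofile()
    sys.setprofile(profiler)
    try:
        result = func(*args, **kwargs)
    finally:
        sys.setprofile(previous)   # always restore the previous hook
    return result, deepest


# Hypothetical use case with three layers.
def repository(order_id):
    return {"id": order_id, "total": 40}


def service(order_id):
    return repository(order_id)


def handler(order_id):
    return service(order_id)


MAX_DEPTH = 5  # assumed threshold; tune it for your own codebase

order, depth = max_call_depth(handler, 7)
within_budget = depth <= MAX_DEPTH
```

A check like this could run in the test suite and fail the build once `within_budget` becomes false for a given use case.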

We can summarize all of this in one idea: the required cognitive load is too high.

What to do?

If you reach that threshold, it is better to rethink what you did, taking all the variables into account. It’s time to pay the tech debt you have in front of you.

Remember that tech debt is not something you can avoid, and you are probably using the term wrong. Tech debt is the delta between what you know now and what you have in the code.

Why refactor instead of starting from scratch?

The devil is in the details. You will probably not take into account everything that part of the code does. You may have a good idea of it, but you don’t know everything.

The code, the environments, and your tests will give you feedback about your refactor if you do it in small steps.

So it is usually better to remove excess levels of indirection by refactoring than to throw everything away and start from scratch.

How to do it?

One way of removing excess levels of indirection in your code is the “inline function” refactoring. We are going to use it to understand what is under the hood and to plan the small steps of our refactor.

In almost all modern IDEs, inline function is an automated refactoring: it moves the logic of a function into the callers of that function.

We can usually choose where to inline and whether the original method is kept or removed.

Select the safer option; we just want to understand what is in the code.
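A small before/after sketch of inline function, written by hand in Python. The pricing functions and the `TAX_PERCENT` value are hypothetical and exist only to illustrate the mechanics; an IDE would perform the same transformation automatically.

```python
TAX_PERCENT = 21  # assumed value, for illustration only


# Before: the reader has to jump to two helpers to see how the total is built.
def net_price(unit_cents, quantity):
    return unit_cents * quantity


def tax(amount_cents):
    return amount_cents * TAX_PERCENT // 100


def total_before(unit_cents, quantity):
    net = net_price(unit_cents, quantity)
    return net + tax(net)


# After inlining net_price and tax into the caller: the whole rule is in one place.
def total_after(unit_cents, quantity):
    net = unit_cents * quantity
    return net + net * TAX_PERCENT // 100
```

Both versions return the same value for the same input, so existing tests keep giving feedback while the helpers are kept around as the safer option.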

If the code is spread across different classes and is harder to inline, you can:

Remove the collaborator injection and create the collaborator inside that class.

Copy the collaborator’s methods to the caller, which now holds the attributes those methods need.

Use the copied methods in your top-level function.

Inline the methods.

Once you have inlined everything into your caller function, you will be able to see in one place what is being done. It’s easier to understand what a use case is doing when you don’t need to open multiple files and the function to read is not massive.
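The steps for code spread across classes can be sketched as follows. This is an exploratory example under stated assumptions: `DiscountPolicy`, `Checkout` and the 10 percent discount are hypothetical, and the inlined version is a temporary state meant for understanding the code, not a final design.

```python
# Before: the discount rule lives in an injected collaborator.
class DiscountPolicy:
    def __init__(self, percent):
        self.percent = percent

    def apply(self, amount_cents):
        return amount_cents - amount_cents * self.percent // 100


class Checkout:
    def __init__(self, policy):
        self._policy = policy  # injected collaborator

    def total(self, prices_cents):
        return self._policy.apply(sum(prices_cents))


# After: injection removed, the collaborator's method copied in and inlined.
class InlinedCheckout:
    def total(self, prices_cents):
        percent = 10  # assumed value that used to be configured at injection time
        amount = sum(prices_cents)
        return amount - amount * percent // 100


before = Checkout(DiscountPolicy(10)).total([1000, 500])
after = InlinedCheckout().total([1000, 500])
```

With everything in one method it becomes visible that the use case is just a sum and a percentage, which makes it easier to decide which abstraction, if any, deserves to come back.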

Now that you can easily see what has been done:

Think about what is the right thing to do

Draw up a plan to refactor the code in that direction

Apply the plan

Adjust the plan as you go

Repeat

Separation of Concerns

The idea that coupling sometimes makes code easier to read and understand is an uncomfortable truth for a lot of architects. It means that smaller pieces of code do not always reduce complexity. Remember, coding is about choosing your tradeoffs.

Coupling is not bad in itself. What is bad is coupling things that need to change for different reasons, which is why Separation of Concerns is critical:

Let me try to explain to you, what to my taste is characteristic for all intelligent thinking. It is, that one is willing to study in depth an aspect of one's subject matter in isolation for the sake of its own consistency, all the time knowing that one is occupying oneself only with one of the aspects. We know that a program must be correct and we can study it from that viewpoint only; we also know that it should be efficient and we can study its efficiency on another day, so to speak. In another mood we may ask ourselves whether, and if so: why, the program is desirable. But nothing is gained—on the contrary!—by tackling these various aspects simultaneously. It is what I sometimes have called "the separation of concerns", which, even if not perfectly possible, is yet the only available technique for effective ordering of one's thoughts, that I know of. This is what I mean by "focusing one's attention upon some aspect": it does not mean ignoring the other aspects, it is just doing justice to the fact that from this aspect's point of view, the other is irrelevant. It is being one- and multiple-track minded simultaneously.

Edsger Wybe Dijkstra (On the role of scientific thought, 1974)

Just remember what we were saying at the beginning of this article.

We can solve any problem by introducing an extra level of indirection, except for the problem of too many levels of indirection.