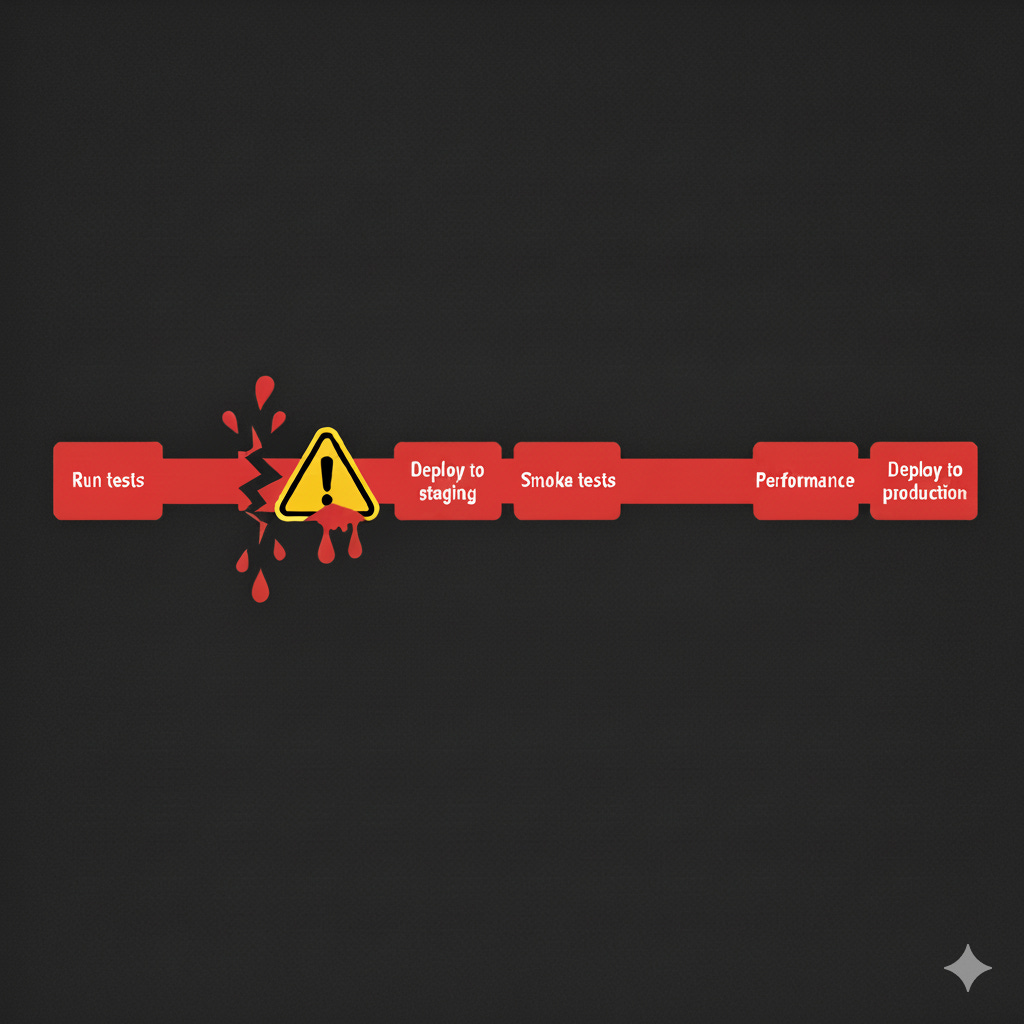

The broken pipeline

People think they need to avoid breaking the pipeline, but it's not exactly like that. It's much better to be fast at fixing it.

The deployment pipeline is a machine in charge of certifying that our code is in a releasable state and then automating the deployment to the different environments we have. For the sake of your mental health, try to have at most two environments: one for CI plus production, or just production.

When the pipeline is broken, the team's main task is fixing it. By a broken pipeline I mean anything that stops your changes from progressing to production.

But this statement is usually misunderstood as “we cannot break the pipeline”. So, to prevent human errors from breaking it, we introduce bureaucracy, basically manual gatekeepers.

One of the biggest mistakes is believing that creating more environments, or using PRs as a mechanism to make sure the code works, comes for free.

It doesn’t. All those things introduce delays and misunderstandings, so we create bigger problems than the one we are trying to solve: the broken pipeline.

Avoiding errors is not possible. What we can do instead is detect errors earlier and fix them faster. Our goal is to be green almost always.

Being always green

One of the things people always repeat when they have a CI server is the idea of not breaking the build. This means not pushing code to the mainline that lacks the quality to pass the pipeline steps. Sometimes we think that, to avoid this, we have to put a lot of bureaucracy in front of our commits. But what if we try to not breaking anything at a…

Let’s say that instead of avoiding breaking the pipeline, I push for a pipeline with the following characteristics:

The whole pipeline is fast to run: from commit to prod in less than 20 minutes.

Devs monitor the pipeline, and if the commit fails, it’s reverted immediately.

Our tests are almost deterministic: no flakiness, or a really low rate of it, less than 1 in 100 executions.

No one pushes code to remote main if the pipeline is red.

The pipeline is red for less than 5–10 minutes. Time to fix the pipeline is key: first fix the pipeline, then think about your code. Usually the best thing to do is revert the commit; basically, we have to unblock the other devs.

There is always someone in charge of fixing the pipeline if it’s red.

Devs run the local tests frequently, at least once per commit. Not all of our tests run locally; which tests run in which stage depends on how fast they are.

Each commit is tested.
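As a rough illustration, the measurable items in the list above can be turned into an automated check. This is only a sketch: the `PipelineRun` record, the `check_pipeline` function, and the idea of feeding it a history of runs are assumptions made for the example, not part of any CI tool. The thresholds come from the list (20 minutes per run, less than 1 flaky run per 100, at most 10 minutes red).

```python
from dataclasses import dataclass


@dataclass
class PipelineRun:
    """One commit-to-prod run of the pipeline (hypothetical record)."""
    duration_minutes: float  # time from commit to prod
    passed: bool             # final result of the run
    flaky: bool = False      # failed for reasons unrelated to the commit


# Thresholds taken from the characteristics listed above.
MAX_RUN_MINUTES = 20
MAX_FLAKY_RATE = 0.01   # less than 1 per 100 executions
MAX_RED_MINUTES = 10


def check_pipeline(runs, red_periods_minutes):
    """Return a list of violations; an empty list means the pipeline is healthy."""
    if not runs:
        return []
    problems = []
    slowest = max(run.duration_minutes for run in runs)
    if slowest >= MAX_RUN_MINUTES:
        problems.append(f"slowest run took {slowest} min")
    flaky_rate = sum(run.flaky for run in runs) / len(runs)
    if flaky_rate >= MAX_FLAKY_RATE:
        problems.append(f"flaky rate is {flaky_rate:.1%}")
    longest_red = max(red_periods_minutes, default=0)
    if longest_red > MAX_RED_MINUTES:
        problems.append(f"pipeline stayed red for {longest_red} min")
    return problems


# Example: 200 runs, one of them flaky, and two short red periods.
runs = [PipelineRun(12, True)] * 199 + [PipelineRun(14, False, flaky=True)]
print(check_pipeline(runs, red_periods_minutes=[4, 7]))
```

The remaining items (someone always being in charge, no pushes on red) are team agreements rather than numbers, so they are left out of the sketch.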

This means forgetting about using PRs, multiple environments, or multiple pipelines to solve problems that you only have because you decided to:

Invert the test pyramid and make your tests slow, turning every change into a nightmare.

Have multiple preproduction environments to isolate people. Don’t do that; put everyone in the same environment.

Isolate people with the intention of having more “stable” environments.

It’s better to make people responsible for their changes and stress that if one person or pair breaks the pipeline with their changes, fixing it is the next thing they do, as fast as they can. If this means reverting their commits, let’s revert the commits.
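The "revert first, think later" rule can be written down as a tiny decision helper. This is a minimal sketch, assuming the team uses git and already knows which commit broke the pipeline; the function names `next_action`, `revert_command` and `revert` are made up for the example. It relies only on the standard `git revert --no-edit` command, which creates a new commit that undoes the given one.

```python
import subprocess


def revert_command(commit_sha):
    """Build the git command that undoes one commit with a new revert commit."""
    return ["git", "revert", "--no-edit", commit_sha]


def next_action(pipeline_is_red, breaking_commit_sha=None):
    """Decide what the person or pair who pushed last should do next."""
    if not pipeline_is_red:
        return "carry on"
    if breaking_commit_sha is None:
        return "find the breaking commit"
    return "run: " + " ".join(revert_command(breaking_commit_sha))


def revert(commit_sha):
    """Run the revert in the current repository; raises if git fails."""
    subprocess.run(revert_command(commit_sha), check=True)


# Example with a placeholder commit hash.
print(next_action(pipeline_is_red=True, breaking_commit_sha="abc1234"))
```

The point of the sketch is the ordering: when the pipeline is red, unblocking everyone comes before understanding the bug.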

This makes everyone check the pipeline and start thinking about what will happen when their changes are tested by it. This is shifting quality left.

In this scenario, where people care about their changes, where the pipeline is fast, and where a red pipeline is fixed in minutes, we will see that the pipeline is green almost always.

Trying to avoid breaking the pipeline usually makes the pipeline stay red for longer.
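A back-of-the-envelope calculation shows why this can happen. The numbers below are invented purely for illustration and are not measurements: one team breaks the pipeline three times a day but fixes it in 10 minutes, while another team, slowed by gatekeeping, breaks it only once every two days but needs four hours to get it green again. The helper `green_fraction` and the 480-minute working day are also assumptions of the sketch.

```python
def green_fraction(breaks_per_day, minutes_to_fix, working_minutes=480):
    """Fraction of the working day the pipeline is green, under a simple model
    where every break keeps the pipeline red for the same amount of time."""
    red_minutes = min(breaks_per_day * minutes_to_fix, working_minutes)
    return 1 - red_minutes / working_minutes


# Illustrative, made-up numbers for the two teams described above.
fast_fixers = green_fraction(breaks_per_day=3, minutes_to_fix=10)
gatekept = green_fraction(breaks_per_day=0.5, minutes_to_fix=240)

print(f"fast fixers: {fast_fixers:.2%} green")
print(f"gatekept:    {gatekept:.2%} green")
```

In this model, what matters is the product of how often the pipeline breaks and how long it stays red, so a team that breaks it more often can still be green for more of the day.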